VR level editor with OpenAI voice commands

Details:

- VR level editor.

- Voice commands to create objects.

- Custom interaction system to move, rotate and scale objects.

- Using the game engine StereoKit with C#.

- OpenAI API to convert text into JSON.

- Github link.

The user can use voice commands to create, remove, and duplicate objects, and use their hands to move, rotate, and scale them. This is made possible by the OpenAI API, which converts the transcribed speech into JSON that the editor reads at runtime.

In this video I create a snowman⛄

Why?

I had the idea to create a custom game engine for virtual reality, using OpenXR together with DirectX 11 as the graphics API. However, after researching example projects online, I realized that the scope was too large for me. Fortunately, I came across an article written by the same person who made the original example project. It described StereoKit, a custom game engine designed specifically for virtual reality. I decided to start creating simple projects with it, but still had difficulty coming up with a larger project idea.

Then I was introduced to OpenAI's ChatGPT and was fascinated by how close it comes to understanding human conversation. I began to think about what sort of project I could make with OpenAI's API, but still couldn't come up with an idea. Suddenly, it hit me: I could create a VR level editor in StereoKit, where users create objects with their voice with the help of OpenAI's API. This was an exciting project, as it would allow me to learn how to use both StereoKit and OpenAI's API.

What can the editor do?

The user can create objects in their right or left hand.

Voice command: Hey computer, create a red cube in my right hand and two blue spheres in left hand.

The user can duplicate and remove the objects they are grabbing.

Voice command: Hey computer, remove the object I have in my right hand, but duplicate the one in my left hand

The user can move, rotate, and scale the objects. Using the scaling coordinate system, the user can choose to scale an object on all axes or on just one axis.

The AI supports very long voice commands.

Voice command: Hey computer create 1 blue cube in my right hand 2 white sphere in my left hand 3 yellow cylinders in my right and 4 brown cubes in my left hand 5 blue cylinders in my right hand and 1 red cube in my left hand

The AI recognizes when the user says something that does not make sense as a command.

Voice command: Hey computer, I like melons

AI response: I'm glad to hear that, but is there anything I can assist you with regarding creating or manipulating virtual objects?

How it works:

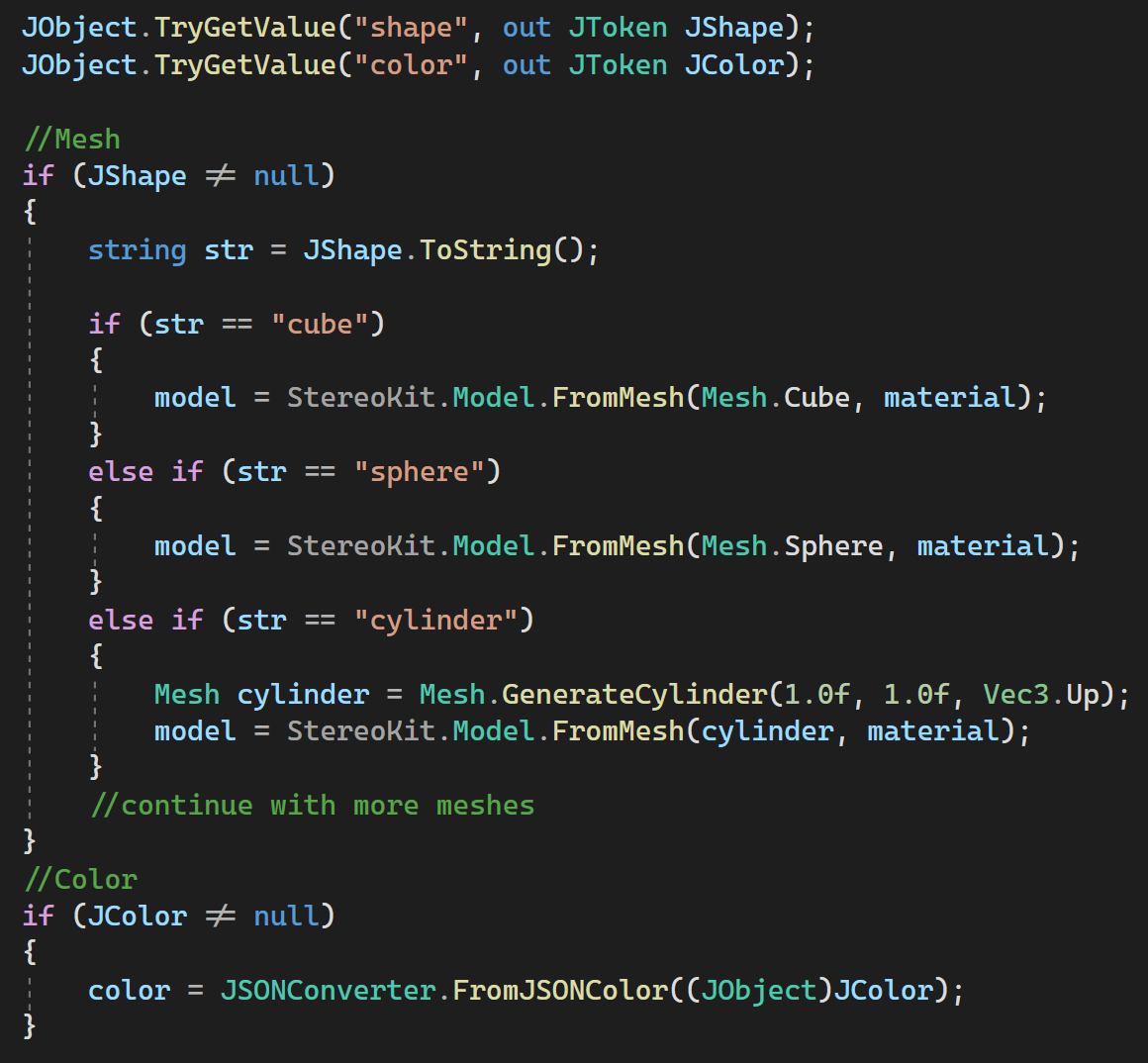

I use the open-source VR game engine StereoKit to display the virtual world. To interpret the user's spoken words, I use Microsoft Azure Cloud Services to transcribe them into text. One of OpenAI's text models then converts that text into a JSON block. These models have been trained on a vast amount of internet-sourced data, which makes them effective at understanding text and converting it from one format to another. Below is an example of the JSON block generated from the user's text:

Voice command: Hey computer, create a red cube in my right hand

AI response: {"add objects": [{"count": 1, "hand": "right", "shape": "cube", "color": {"r": 1.0, "g": 0.0, "b": 0.0}}]}

The JSON values are easy to read in C# and by looking at its values I'm able to create the objects.
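The step from JSON to objects can be sketched as follows. The editor itself is written in C#; this is only a small Python illustration of the logic, not the project's actual code. The `ObjectSpec` type, the `parse_add_objects` helper, and the fallback values (right hand, white colour, count of 1) are assumptions made for the example.

```python
import json
from dataclasses import dataclass


@dataclass
class ObjectSpec:
    """One object to spawn (hypothetical type, not from the editor's source)."""
    hand: str     # "left" or "right"
    shape: str    # e.g. "cube", "sphere", "cylinder"
    color: tuple  # (r, g, b), each component between 0.0 and 1.0


def parse_add_objects(response_text, default_hand="right"):
    """Expand the "add objects" list into one ObjectSpec per object to create."""
    command = json.loads(response_text)
    specs = []
    for entry in command.get("add objects", []):
        color = entry.get("color", {})
        # Assumed fallback: white when a colour component is missing.
        rgb = (color.get("r", 1.0), color.get("g", 1.0), color.get("b", 1.0))
        # "count" says how many copies of this entry to create.
        for _ in range(int(entry.get("count", 1))):
            specs.append(ObjectSpec(entry.get("hand", default_hand), entry["shape"], rgb))
    return specs


response = ('{"add objects": [{"count": 1, "hand": "right", "shape": "cube", '
            '"color": {"r": 1.0, "g": 0.0, "b": 0.0}}]}')
specs = parse_add_objects(response)
print(specs)
```

Expanding `count` into individual specs keeps the spawning code simple: it only ever has to create one object at a time, however long the voice command was.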

To help the model reliably generate the intended JSON, I provide it with example text up front. Guided by these examples, it is able to generate appropriate responses for new text it has never seen before. Below is an example of the text I initially provide the model:

User: create three blue cubes and two white spheres on my left hand

Assistant: {"add objects": [{"count": 3, "hand": "right", "shape": "cube", "color": {"r": 0.0, "g": 0.0, "b": 1.0}}, {"count": 2, "hand": "left", "shape": "sphere", "color": {"r": 1.0, "g": 1.0, "b": 1.0}}]}

User: Remove the object in the right hand. Grabbing object with right hand

Assistant: {"remove" : ["right"]}

Support: Convert the user message to JSON. Only respond with the JSON object. Do not apologise or write any normal text
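One way to assemble text like this for a chat-style model is sketched below in Python (the editor itself is C#). It only builds the message list and does not call any API. Mapping the "Support" line to a `system` role, placing it first, and the `build_messages` helper are all assumptions for illustration; the post does not say which endpoint or message layout the editor uses.

```python
# Instruction text taken from the "Support" line above.
SYSTEM_PROMPT = ("Convert the user message to JSON. Only respond with the JSON object. "
                 "Do not apologise or write any normal text")

# (user text, expected assistant reply) pairs; more pairs follow the same pattern.
EXAMPLES = [
    ("Remove the object in the right hand. Grabbing object with right hand",
     '{"remove" : ["right"]}'),
]


def build_messages(user_text, examples=EXAMPLES, system_prompt=SYSTEM_PROMPT):
    """Return the instruction, the example exchanges, then the new user text."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_user, example_assistant in examples:
        messages.append({"role": "user", "content": example_user})
        messages.append({"role": "assistant", "content": example_assistant})
    # The live transcription always goes last, so the model answers it.
    messages.append({"role": "user", "content": user_text})
    return messages


messages = build_messages("create a red cube in my right hand")
for message in messages:
    print(message["role"], ":", message["content"])
```

Because the examples travel with every request, adding a new command type is a matter of appending another pair to `EXAMPLES`.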

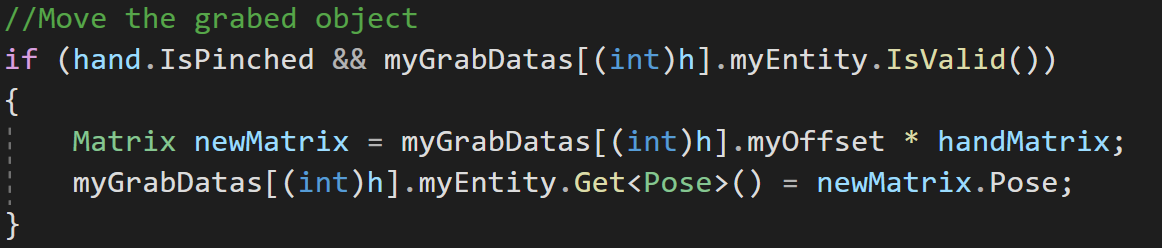

Interaction system to grab, rotate and scale.

The StereoKit engine doesn't have an integrated parenting system, so I had to write my own matrix math to parent an object to the user's hand while still allowing it to be scaled independently on each axis. To ensure the coordinate system always appeared on top of the object, I first rendered it using the DepthTest.Always setting. However, this caused some vertices to be rendered in the wrong order, so I rendered the coordinate system a second time with DepthTest.Less to display the vertices in the right order.
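A common way to do this kind of parenting is to store the object's pose relative to the hand at the moment of the grab, re-apply that offset to the hand's pose every frame, and keep the per-axis scale as a separate matrix applied last. The Python sketch below illustrates that idea with plain 4x4 matrices and column vectors; it is an assumption about the general technique, not the editor's actual C# code, and all function names are made up for the example. Libraries that use row vectors multiply in the opposite order.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid(yaw, tx, ty, tz):
    """Rotation of `yaw` radians about the Y axis, followed by a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, 0.0, s, tx], [0.0, 1.0, 0.0, ty], [-s, 0.0, c, tz], [0.0, 0.0, 0.0, 1.0]]


def rigid_inverse(m):
    """Invert a rotation+translation matrix: transpose R, translate by -R^T t."""
    r_t = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [-sum(r_t[i][k] * m[k][3] for k in range(3)) for i in range(3)]
    return [r_t[0] + [t[0]], r_t[1] + [t[1]], r_t[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def scale(sx, sy, sz):
    """Per-axis scale, kept separate from the rigid pose."""
    return [[sx, 0.0, 0.0, 0.0], [0.0, sy, 0.0, 0.0], [0.0, 0.0, sz, 0.0], [0.0, 0.0, 0.0, 1.0]]


def transform_point(m, p):
    """Apply a 4x4 matrix to a 3D point."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))


# At grab time: remember where the object sits relative to the hand.
hand_at_grab = rigid(0.0, 0.0, 1.0, 0.0)
object_at_grab = rigid(0.0, 0.2, 1.0, 0.0)
offset = mat_mul(rigid_inverse(hand_at_grab), object_at_grab)

# Every frame: follow the hand, then apply the object's own per-axis scale.
hand_now = rigid(math.pi / 2, 1.0, 1.0, 0.0)
object_pose = mat_mul(hand_now, offset)
render_matrix = mat_mul(object_pose, scale(2.0, 1.0, 1.0))
print(transform_point(render_matrix, (0.0, 0.0, 0.0)))
```

Keeping the scale out of the hand-relative offset means the stretch always follows the object's own axes, so rotating the hand never shears a non-uniformly scaled object.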

3D artist user test

The 3D artist Markus Molid used my level editor to create a tree. During this user test, I identified several bugs and received valuable feedback on how to improve the user experience. For instance, Markus phrased his requests very politely, which led the AI to respond with a mix of normal text and JSON. At the time, my editor only supported AI responses that consisted entirely of JSON.

Voice command: Hey, computer can you create 5 Peach colored spheres?

AI response: Sure, here's the command to create 5 peach-colored spheres:

{"add objects":[{"count":5, "shape": "sphere", "color":{"r": 1.0, "g": 0.8, "b":0.6}}]}
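One possible way to tolerate replies like this is to look for the first decodable JSON object inside the text rather than parsing the whole reply. The Python sketch below shows the idea (the editor itself is C#). The `extract_json` helper is hypothetical, and the post does not describe how the editor actually handles this case.

```python
import json


def extract_json(response_text):
    """Return the first decodable JSON object in the text, or None if there is none."""
    decoder = json.JSONDecoder()
    start = response_text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start` and ignores
            # whatever text comes after it.
            value, _end = decoder.raw_decode(response_text, start)
            if isinstance(value, dict):
                return value
        except json.JSONDecodeError:
            pass  # Not valid JSON from this brace; try the next one.
        start = response_text.find("{", start + 1)
    return None


reply = ("Sure, here's the command to create 5 peach-colored spheres:\n\n"
         '{"add objects":[{"count":5, "shape": "sphere", '
         '"color":{"r": 1.0, "g": 0.8, "b":0.6}}]}')
command = extract_json(reply)
print(command)
```

A reply with no JSON at all, such as the answer to "I like melons", yields `None`, which the caller could treat as plain text to show or speak to the user.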